The #1 request from every studio is the ability to run pipeline actions from the Shotgun web interface. Luckily, SG supports this through Action Menu Items (AMIs). The gist: choose an entity type in the web interface (e.g. Version), name your new item (say, Render Slates…), and set up a URL to receive the request (for example, the IP address and port of a machine in your facility). When a user runs the item, SG packages up their selection of that entity type (one or many entities), sends the data to the receiving machine, and redirects the user's web browser in the process. With that data and connection, the machine at the other end can perform any action (say, submit all the selected shots to the farm) and give the end user visual feedback (success or failure).

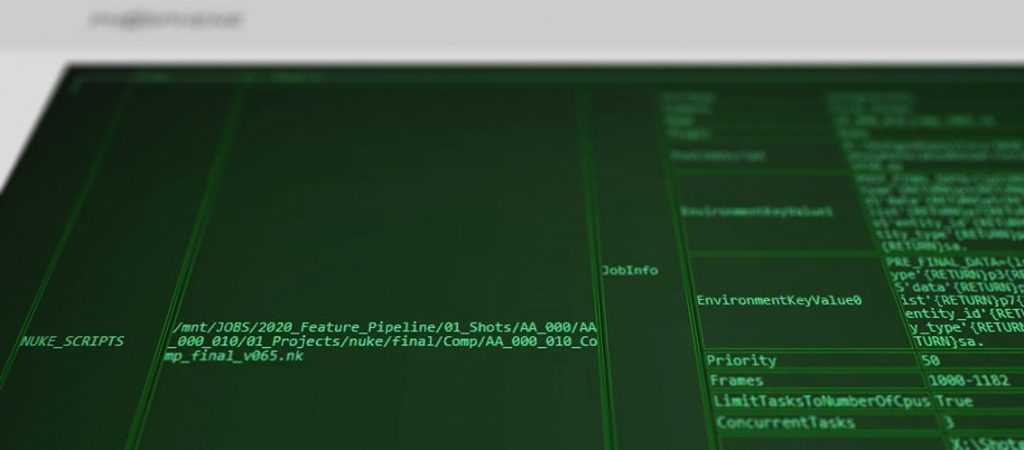
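To make the receiving end concrete, here is a minimal sketch of a listener using only the Python standard library. It assumes the AMI's URL points at this machine on port 8080 (a hypothetical choice) and that SG delivers the selection as a form-encoded POST. The field names used (`entity_type`, `selected_ids`, `user_login`) follow Shotgun's AMI documentation as I understand it, so verify them against what your site actually sends. This is not my AMI Server's code, just the bare mechanism.

```python
import html
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.parse import parse_qs


def parse_ami_payload(body: str) -> dict:
    """Parse the form-encoded body of an AMI POST into a small dict.

    Field names are assumptions based on Shotgun's AMI docs; check your site.
    """
    fields = parse_qs(body)

    def first(name: str) -> str:
        # parse_qs returns a list per key; AMI fields carry a single value.
        return fields.get(name, [""])[0]

    # selected_ids is a comma-separated list of the rows the user highlighted.
    raw_ids = first("selected_ids")
    return {
        "entity_type": first("entity_type"),
        "ids": [int(i) for i in raw_ids.split(",") if i.strip()],
        "user_login": first("user_login"),
    }


class AMIHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        body = self.rfile.read(length).decode("utf-8")
        payload = parse_ami_payload(body)
        # A real server would dispatch to an action here (e.g. a farm submission).
        summary = (f"Received {len(payload['ids'])} {payload['entity_type']} "
                   f"entities from {payload['user_login']}")
        page = f"<html><body><pre>{html.escape(summary)}</pre></body></html>"
        data = page.encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)


if __name__ == "__main__":
    # Hypothetical port: set the AMI's URL to http://<this-machine>:8080/
    HTTPServer(("0.0.0.0", 8080), AMIHandler).serve_forever()
```

Because the browser is sent to this server, whatever HTML the handler writes back is exactly what the user sees, which is where the success/failure feedback comes in.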

I developed a plugin-based AMI Server (philosophically similar to the Shotgun Event Server) for just this purpose, and I'm very happy with the result. The key is not only to perform the action, but to report back to the user in a friendly, readable way: the user should be able to tell at a glance whether the action succeeded or failed. I even had a friend who does CSS design whip up a nice-looking, CRT-style output page, because why not 😉
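For a rough idea of how a plugin-based design can keep actions separate from the feedback page, here is a hypothetical sketch (not my server's actual code). Plugins register a function for an (entity type, action name) pair; the action name is assumed to come from the AMI's URL path, e.g. `/render_slates`. The dispatcher runs the plugin, turns any exception into a failure, and renders a page with an unmistakable SUCCESS or FAILED banner. `register`, `run_action`, `render_feedback`, and `render_slates` are all illustrative names.

```python
import html
from typing import Callable, Dict, List, Tuple

# Hypothetical plugin signature: takes entity ids, returns log lines for the user.
# Raising an exception signals failure.
Action = Callable[[List[int]], List[str]]

_REGISTRY: Dict[Tuple[str, str], Action] = {}


def register(entity_type: str, name: str):
    """Decorator a plugin module uses to register an action."""
    def wrap(func: Action) -> Action:
        _REGISTRY[(entity_type, name)] = func
        return func
    return wrap


def run_action(entity_type: str, name: str, ids: List[int]) -> Tuple[bool, List[str]]:
    """Run the registered action and capture success or failure."""
    action = _REGISTRY.get((entity_type, name))
    if action is None:
        return False, [f"No action '{name}' registered for {entity_type}"]
    try:
        return True, action(ids)
    except Exception as exc:  # any plugin error is reported back to the browser
        return False, [f"{type(exc).__name__}: {exc}"]


def render_feedback(ok: bool, lines: List[str]) -> str:
    """Build a simple HTML page with a clear SUCCESS/FAILED banner."""
    status = "SUCCESS" if ok else "FAILED"
    body = "\n".join(html.escape(line) for line in lines)
    return f"<html><body><h1>{status}</h1><pre>{body}</pre></body></html>"


@register("Version", "render_slates")
def render_slates(ids: List[int]) -> List[str]:
    # Placeholder: a real plugin would queue slate renders on the farm.
    return [f"Queued slate render for Version {i}" for i in ids]


if __name__ == "__main__":
    ok, log = run_action("Version", "render_slates", [101, 102])
    print(render_feedback(ok, log))
```

Catching exceptions in the dispatcher rather than in each plugin means a buggy plugin still produces a readable FAILED page instead of a blank browser tab.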

Check out the video below to see it in action!